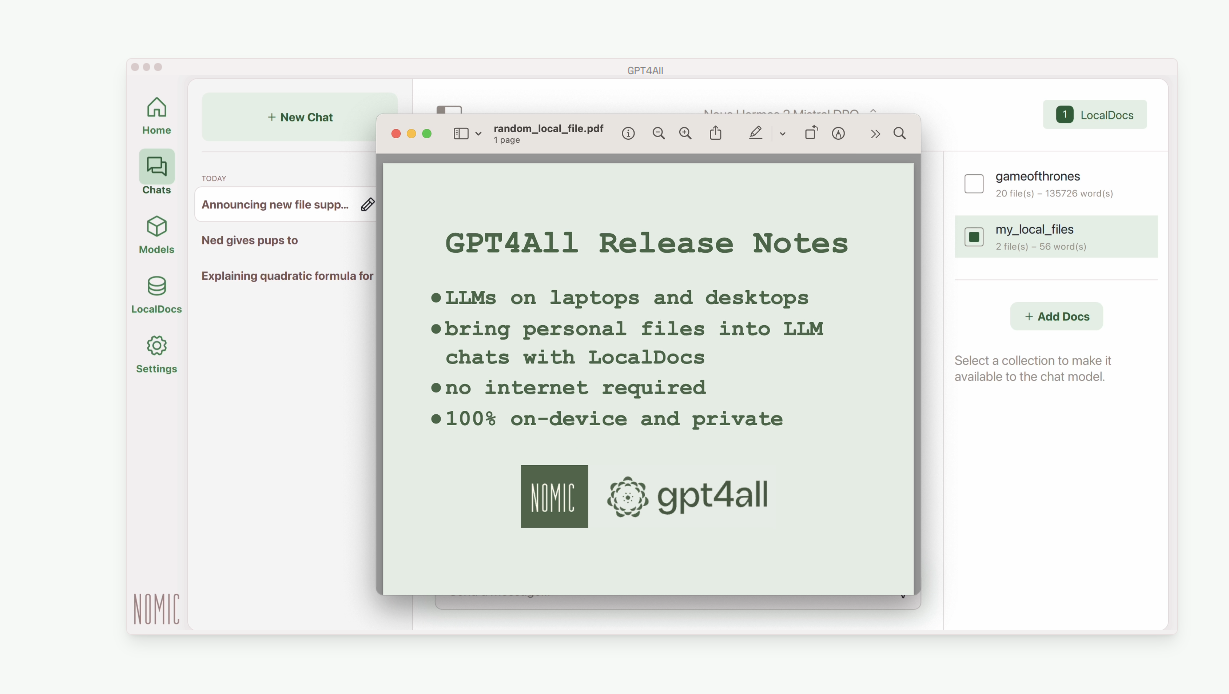

In a previous article, we explored local Retrieval-Augmented Generation (RAG) using LM Studio and AnythingLLM. Today, we’re diving into another powerful tool for local RAG: GPT4All.

RAG, in simple terms, is a technique that enhances AI models by retrieving relevant information from a knowledge base before generating responses. It’s like giving your AI a personalized library to reference during conversations.

GPT4All brings this capability to your desktop, allowing you to run large language models locally and privately while leveraging your own documents as a knowledge source. Let’s explore how GPT4All makes local RAG accessible and efficient for everyday users and developers alike.

GPT4All Desktop Application: Your Local AI Powerhouse

GPT4All is more than just another AI chat interface. It’s a comprehensive desktop application designed to bring the power of large language models (LLMs) directly to your device. Here’s what makes GPT4All stand out:

- Local Processing: Unlike cloud-based AI services, GPT4All runs entirely on your machine. This means responses don’t depend on a network connection and, crucially, your prompts and documents never leave your device.

- Model Flexibility: The application allows you to download and switch between various LLMs. Whether you prefer Llama 3 or want to experiment with other models, GPT4All has you covered.

- LocalDocs Feature: This is where GPT4All truly shines for RAG enthusiasts. LocalDocs lets you transform your personal files into a knowledge base for the AI, enabling context-aware conversations based on your own data.

- Cross-Platform Support: Whether you’re on Windows, Mac, or Linux, GPT4All has a version for you, ensuring accessibility across different operating systems.

- User-Friendly Interface: Despite its powerful features, GPT4All maintains a clean, intuitive interface that makes AI interactions approachable for both novices and experts.

Getting Started with GPT4All

Getting started with GPT4All is straightforward:

- Download and install the application for your operating system from the official GPT4All website.

- Launch the app and click “Start Chatting”.

- Add a model by clicking “+ Add Model”. For beginners, Llama 3 is a solid starting point.

- Once your model is downloaded, navigate to the Chats section and load your chosen model.

- You’re now ready to start interacting with your local AI!

In the next section, we’ll dive deeper into setting up and using LocalDocs, the feature that brings RAG capabilities to your personal AI assistant.

LocalDocs: Chat with Your Documents Locally

Unlocking LocalDocs: Your Personal AI Knowledge Base

LocalDocs is GPT4All’s implementation of RAG, allowing you to enhance your AI interactions with information from your personal files. Here’s how it works and how to set it up:

Understanding LocalDocs:

LocalDocs uses an embedding process to create a searchable index of your documents. When you chat with the AI, it can then retrieve relevant information from this index to inform its responses. This means your AI can “read” and reference your documents, all while keeping your data entirely local and private.

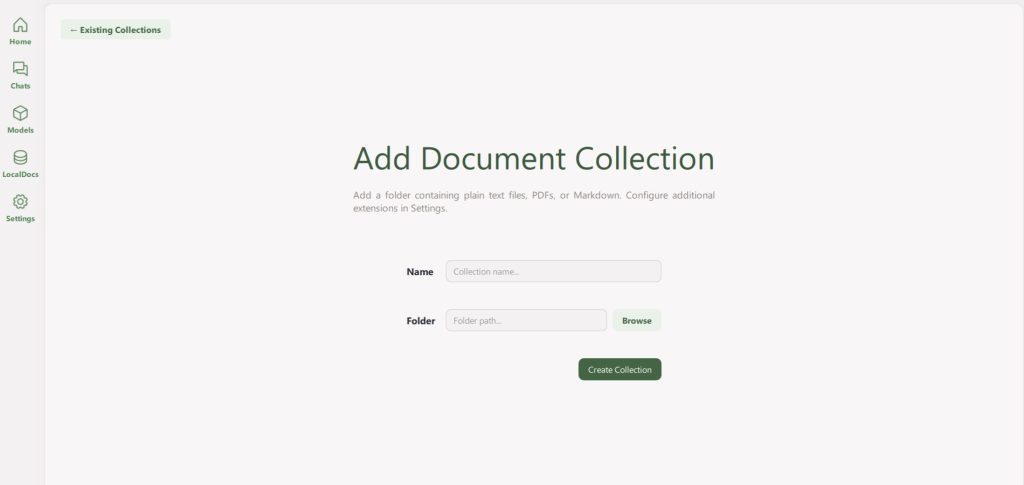
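To make the idea of a searchable index more concrete, here is a minimal sketch of the first half of that process: splitting files in a folder into overlapping text snippets that could later be embedded. It is not GPT4All’s actual code. The function names (`chunk_text`, `build_snippet_index`), the character-based windows, and the default sizes are all assumptions chosen for illustration.

```python
from pathlib import Path


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into overlapping character windows.

    The sizes are illustrative assumptions, not GPT4All's real settings.
    """
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        piece = text[start:start + chunk_size].strip()
        if piece:
            chunks.append(piece)
        # Stop once the current window has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


def build_snippet_index(folder: str, pattern: str = "*.txt") -> list[dict]:
    """Collect snippets from every matching file, remembering where each came from."""
    index = []
    for path in sorted(Path(folder).rglob(pattern)):
        text = path.read_text(encoding="utf-8", errors="ignore")
        for position, snippet in enumerate(chunk_text(text)):
            index.append({"source": path.name, "position": position, "text": snippet})
    return index
```

Keeping the source file name next to each snippet is what would let a tool show where an answer came from. The overlap between windows reduces the chance that a relevant sentence is cut in half at a chunk boundary.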

Setting Up LocalDocs:

- In the GPT4All interface, locate and click the “+ Add Collection” button.

- Name your collection and link it to a folder containing the documents you want to include. This could be a folder of work documents, research papers, or any text-based files you want the AI to reference.

- Click “Create Collection”. You’ll see a progress bar as GPT4All processes your documents.

- Once complete, you’ll see a green “Ready” indicator. Don’t worry if you have a large collection – you can start using LocalDocs with the files that are ready while the rest continue processing.

- To use LocalDocs in your chats, look for the LocalDocs button in the top-right corner of the chat interface. This toggles the use of your local knowledge base.

- When chatting, you can view the sources of the AI’s information by clicking “Sources” below its responses.

Embedding Models: The Magic Behind LocalDocs

LocalDocs uses Nomic AI’s embedding models to create vector representations of your text snippets. These vectors capture the semantic meaning of the text, allowing the system to find relevant information based on the similarity between your query and the embedded text.

When you ask a question, GPT4All:

- Embeds your query into a vector.

- Searches for similar vectors in your LocalDocs collection.

- Retrieves the most relevant text snippets.

- Includes these snippets in the prompt sent to the LLM.

- Generates a response based on both its training and the retrieved local information.

This process happens seamlessly and quickly, giving you AI responses informed by your personal knowledge base.
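The first four steps can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not GPT4All’s implementation: the `embed` function below simply counts words as a stand-in for a real embedding model such as Nomic’s, and the names `retrieve` and `build_prompt` and the prompt wording are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, snippets: list[str], top_k: int = 2) -> list[str]:
    """Steps 1-3: embed the query, score every snippet, keep the best matches."""
    query_vec = embed(query)
    scored = [(cosine_similarity(query_vec, embed(s)), s) for s in snippets]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop snippets that share nothing with the query.
    return [s for score, s in scored[:top_k] if score > 0]


def build_prompt(query: str, retrieved: list[str]) -> str:
    """Step 4: place the retrieved snippets in the prompt sent to the LLM."""
    context = "\n".join(f"- {s}" for s in retrieved)
    return f"Use the context below to answer.\n\nContext:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "The quarterly report shows revenue grew in Europe.",
        "Our cat likes to sleep on the keyboard.",
        "Revenue in Asia was flat this quarter.",
    ]
    question = "How did revenue grow in Europe?"
    print(build_prompt(question, retrieve(question, docs)))
```

Step 5, generation, is left to the language model that receives the prompt. A real embedding model would also match snippets that use different words for the same idea, which simple word counts cannot do.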

Tips for Effective LocalDocs Use:

- Organize your documents into themed collections for more targeted retrieval.

- Regularly update your collections to keep your AI’s knowledge current.

- Experiment with different types of documents to see what works best for your needs.

Hardware Requirements for local RAG:

GPT4All is designed to run efficiently on modern PCs, but having the right hardware ensures optimal performance. For smooth operation, aim for at least 16GB of RAM and a processor supporting AVX2 instructions.

A dedicated GPU with 8GB+ VRAM can significantly boost performance, especially for larger models and extensive LocalDocs collections.

For Windows and Linux users, any modern CPU with AVX2 support suffices, while macOS users need an Apple Silicon M1 chip or later running macOS 13.6+.
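If you’re unsure whether your CPU supports AVX2, the following sketch shows one way to check on Linux by looking for the `avx2` flag in `/proc/cpuinfo`. The helper names (`cpu_flags`, `supports_avx2`) are hypothetical, and this approach does not apply to Windows or macOS, where you would need to consult your system information or CPU specifications instead.

```python
from pathlib import Path


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the CPU feature flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()


def supports_avx2(cpuinfo_text: str) -> bool:
    """True if the parsed flags include 'avx2'."""
    return "avx2" in cpu_flags(cpuinfo_text)


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        print("AVX2 supported:", supports_avx2(cpuinfo.read_text()))
    else:
        print("No /proc/cpuinfo here; this check only applies to Linux.")
```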

In the next section, we’ll explore some practical applications of GPT4All with LocalDocs, showcasing how this powerful combination can enhance your productivity and decision-making.

Use Cases for Local RAG:

The combination of GPT4All’s local LLM capabilities and LocalDocs’ RAG functionality opens up a world of possibilities for both personal and professional use. Here are some practical applications:

Personal Knowledge Management

- Document Summarization: Quickly get summaries of long documents or research papers in your collection.

- Study Aid: Create a LocalDocs collection of your study materials and use GPT4All to quiz yourself or explain complex concepts.

- Personal Journal Analysis: Gain insights from your personal writings by creating a collection from your journal entries.

Professional Research

- Literature Review: Build a collection of academic papers and use GPT4All to find connections or summarize key findings across multiple sources.

- Patent Analysis: Create a collection of patent documents and use the AI to identify trends or potential infringements.

Business Intelligence

- Market Research: Analyze a collection of news articles, reports, and internal documents to extract market trends and competitive intelligence.

- Customer Feedback Analysis: Process large volumes of customer feedback and use GPT4All to identify common issues or sentiments.

Legal and Compliance

- Contract Analysis: Build a collection of legal documents and use GPT4All to quickly find relevant clauses or potential issues.

- Policy Compliance: Create a collection of company policies and regulations, then use the AI to check if new documents or proposals align with existing rules.

Software Development

Content Creation

Personal Finance

Example Workflow: Market Research with GPT4All and LocalDocs

- Create a LocalDocs collection with industry reports, competitor analysis, and internal market data.

- Ask GPT4All: “What are the emerging trends in our industry based on the documents in my collection?”

- Follow up with: “How do our competitors’ strategies align with these trends?”

- Use the AI’s insights, backed by your own documents, to inform your market strategy.

The key advantage of using GPT4All with LocalDocs for these applications is the combination of AI capabilities with your private, curated knowledge base. This ensures that the AI’s responses are not only intelligent but also highly relevant and tailored to your specific context and needs.
