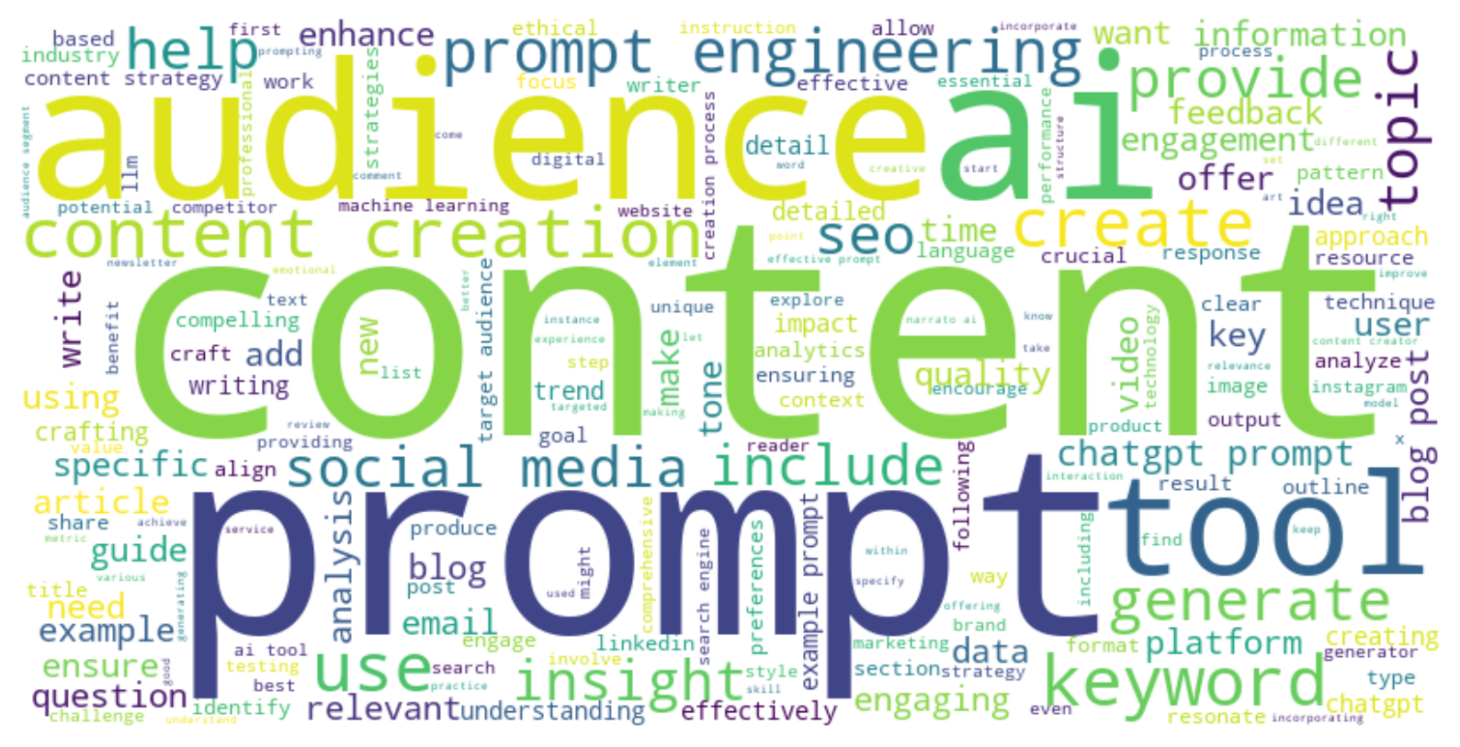

Essential Techniques for Effective Content Generation

Brief overview of large language models (LLMs) and their ability to generate content

Large language models (LLMs) are a type of artificial intelligence system that has been trained on vast amounts of textual data, allowing them to understand and generate human-like language. These models are capable of performing a wide range of natural language processing tasks, including text generation, translation, summarization, and question answering.

One of the most remarkable capabilities of LLMs is their ability to generate coherent and contextually relevant content on virtually any topic. By leveraging their extensive knowledge and understanding of language patterns, LLMs can produce high-quality written material, such as articles, stories, reports, and even code snippets, with minimal human input.

The Importance of Prompts

While large language models (LLMs) like GPT-4, Claude, and others are incredibly powerful and versatile tools, it’s crucial to understand that they do not actually comprehend information the way humans do. Instead, LLMs operate by recognizing patterns in the massive datasets they were trained on and using that pattern matching to generate human-like text outputs.

This has major implications for how we interact with and leverage these models. Unlike traditional software, whose behavior is spelled out in explicit rules and logic, LLMs learn their behavior from data. Their responses are probabilistic: they generate text by repeatedly predicting a likely next token (a word, part of a word, or a character) based on the patterns they identified in their training data. As a result, the quality and relevance of their outputs depend heavily on the prompts or instructions provided by the user.
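To make next-token prediction concrete, here is a toy sketch in Python. The candidate tokens and their probabilities are invented for illustration; a real model computes a distribution over a vocabulary of many thousands of tokens at every step.

```python
import random

# Toy next-token distribution for the context "The cat sat on the".
# These tokens and probabilities are made up for illustration.
next_token_probs = {"mat": 0.6, "sofa": 0.25, "roof": 0.1, "moon": 0.05}


def greedy_next_token(probs):
    """Pick the single most likely token."""
    return max(probs, key=probs.get)


def sample_next_token(probs, rng):
    """Draw one token at random, weighted by its probability."""
    tokens = list(probs)
    weights = [probs[token] for token in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


rng = random.Random(0)
print(greedy_next_token(next_token_probs))
print([sample_next_token(next_token_probs, rng) for _ in range(5)])
```

Greedy selection returns the same token every time, while sampling can return different tokens on different runs. That is one reason the same prompt can produce different outputs.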

Well-crafted prompts that offer clear context, break down tasks into steps, provide relevant examples, and set appropriate constraints essentially put “guardrails” around the model’s text generation. They increase the likelihood that each token predicted will be accurate and relevant to the specified task. Explicit instructions and tangible references point the model towards the desired output in a way that mirrors how a human would approach the problem.

Conversely, prompts that are vague, lack specificity, or fail to appropriately frame and scope the task can lead LLMs astray very quickly. Since each token prediction influences the next, early ambiguity or imprecision tends to compound as the output grows. The model’s responses become a game of prediction whack-a-mole, generating largely irrelevant, inconsistent, or even nonsensical text as it grasps for patterns that simply aren’t there.

The importance of effective prompting cannot be overstated. While LLMs are highly capable tools, they are essentially advanced pattern matchers. Prompts serve as the critical interface through which we convey context and intent to these models. Investing time and effort into prompt engineering is essential to unlocking the full potential of LLMs and ensuring their outputs are accurate, relevant, and valuable for the task at hand.

Understanding LLM Prompts

What is a prompt?

In the context of large language models (LLMs), a prompt is a piece of input text that serves as a starting point or instruction for the model to generate content. Prompts can be as simple as a single word or phrase, or as complex as a multi-paragraph description, depending on the task and the desired output.

Types of prompts

There are several types of prompts that can be used with LLMs, each with its own strengths and applications:

- Text-based prompts: These are the most common type of prompts, where the user provides a textual description or context to guide the LLM’s output. For example, “Write a short story about a time traveler” or “Explain the concept of quantum entanglement in simple terms.”

- Few-shot prompts: In this approach, the user provides a few examples of the desired output, along with the prompt. This can help the LLM better understand the task and generate more relevant content. For instance, providing a few examples of well-written product descriptions before asking the LLM to generate new ones.

- Chain-of-thought prompts: These prompts encourage the LLM to break down complex tasks into a step-by-step reasoning process, making its thought process more transparent and easier to follow. This can be particularly useful for problem-solving or decision-making tasks.

- Constrained prompts: These prompts include specific constraints or guidelines for the LLM to follow, such as word count, tone, style, or format. For example, “Write a 500-word blog post in a conversational tone on the benefits of meditation.”

Importance of clear and well-structured prompts

Regardless of the type of prompt used, clarity and structure are essential for effective LLM content generation. Well-crafted prompts provide the necessary context, instructions, and constraints to guide the LLM in generating relevant, coherent, and high-quality output.

Unclear or ambiguous prompts can lead to confusing, off-topic, or irrelevant responses from the LLM, defeating the purpose of using these powerful language models. Additionally, poorly structured prompts may result in incoherent or disjointed output, making it difficult for the user to extract meaningful information or achieve their desired goals.

Clear and well-structured prompts should:

- Provide sufficient context and background information relevant to the task.

- Specify the desired tone, style, and format of the output.

- Include any necessary constraints or guidelines (e.g., word count, topic focus, etc.).

- Use concise and unambiguous language to avoid confusion.

- Break down complex tasks into smaller, manageable steps (if applicable).
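The checklist above can be turned into a small helper that assembles a prompt from labeled sections. This is a minimal sketch: the function name `build_prompt` and the section labels are illustrative choices, not a standard.

```python
from typing import List, Optional


def build_prompt(
    task: str,
    context: str = "",
    tone: str = "",
    output_format: str = "",
    constraints: Optional[List[str]] = None,
    steps: Optional[List[str]] = None,
) -> str:
    """Assemble a prompt from labeled sections, skipping any that are empty."""
    sections = []
    if context:
        sections.append(f"Context: {context}")
    sections.append(f"Task: {task}")
    if tone:
        sections.append(f"Tone: {tone}")
    if output_format:
        sections.append(f"Format: {output_format}")
    if constraints:
        sections.append("Constraints:\n" + "\n".join(f"- {c}" for c in constraints))
    if steps:
        numbered = "\n".join(f"{i}. {s}" for i, s in enumerate(steps, start=1))
        sections.append("Steps:\n" + numbered)
    return "\n\n".join(sections)


prompt = build_prompt(
    task="Write a blog post on the benefits of meditation.",
    context="The readers are busy professionals new to meditation.",
    tone="conversational",
    output_format="short paragraphs with one bulleted summary at the end",
    constraints=["About 500 words", "No medical claims"],
    steps=["Open with a relatable scenario", "Cover three benefits", "Close with a first exercise"],
)
print(prompt)
```

Keeping each element in its own labeled section makes it easy to see which part of the checklist a prompt is missing.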

By understanding the different types of prompts and the importance of clarity and structure, users can effectively harness the power of LLMs to generate high-quality and relevant content across a wide range of applications.

Strategies for Effective Prompting

To get the most out of large language models and generate accurate, relevant and valuable outputs, it’s important to apply strategic prompting techniques. Based on the latest research and best practices, there are several key strategies that can greatly improve an LLM’s performance:

Break tasks down into subcomponents and steps.

Because LLMs struggle with abstraction beyond their training data, it’s helpful to decompose complex tasks into a sequence of concrete steps. Rather than an open-ended prompt like “Write a short story”, you could break it down into components like “Introduce the main character”, “Describe the setting”, “Outline the central conflict”, etc. This structured approach leverages the models’ robust pattern matching capabilities.
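One way to apply this decomposition is to send one prompt per step and pass earlier outputs along as context. The sketch below assumes a `generate` callable that takes a prompt and returns text; `fake_generate` is a stand-in so the example runs without a real model.

```python
from typing import Callable, List


def run_steps(steps: List[str], generate: Callable[[str], str]) -> List[str]:
    """Send one prompt per step, passing earlier outputs along as context."""
    outputs: List[str] = []
    for step in steps:
        if outputs:
            so_far = "\n".join(outputs)
            prompt = f"Story so far:\n{so_far}\n\nNext step: {step}"
        else:
            prompt = f"First step: {step}"
        outputs.append(generate(prompt))
    return outputs


def fake_generate(prompt: str) -> str:
    """Stand-in for a real model call; it only echoes the requested step."""
    return "[text for: " + prompt.splitlines()[-1] + "]"


story_steps = [
    "Introduce the main character",
    "Describe the setting",
    "Outline the central conflict",
]
for part in run_steps(story_steps, fake_generate):
    print(part)
```

Replacing `fake_generate` with a function that calls your model of choice leaves the rest of the loop unchanged.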

Provide relevant context and examples.

LLMs don’t have true understanding – they predict based on patterns from their training data. Giving relevant contextual details like background information, examples, constraints, etc. increases the likelihood the model will generate an accurate and appropriate response. For example, rather than “Suggest names for a new product”, it’s better to provide the product details and sample names.

Be explicit with instructions and constraints.

Clear and specific prompts reduce ambiguity. Don’t be afraid to over-explain the task, objectives, emphasis, format, etc. An explicit prompt like “Rewrite in a professional, concise tone for academics” gives the model much clearer guardrails than simply saying “Edit this”.

Ask for multiple options to compare.

Since LLMs are essentially predicting text probabilistically, getting several options enables you to scrutinize and cherry-pick the best ones. You can ask for 5 brief introductions, 10 new product names, etc.
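Asking for a numbered list makes the options easy to separate afterwards. The following sketch builds such a request and parses a reply; the helper names are illustrative and the sample reply is written by hand, not produced by a model.

```python
import re
from typing import List


def options_prompt(task: str, n: int) -> str:
    """Ask for a fixed number of options in a format that is easy to parse."""
    return f"{task}\nGive exactly {n} options as a numbered list, one option per line."


def parse_numbered_list(reply: str) -> List[str]:
    """Extract items from lines that look like '1. item' or '2) item'."""
    items = []
    for line in reply.splitlines():
        match = re.match(r"\s*\d+[.)]\s+(.*\S)", line)
        if match:
            items.append(match.group(1))
    return items


print(options_prompt("Suggest names for a reusable water bottle brand.", 3))

# Hypothetical reply, written by hand for this example.
sample_reply = "Here are some ideas:\n1. AquaLoop\n2. RefillCo\n3. EverSip"
print(parse_numbered_list(sample_reply))
```

Lines that do not match the numbered pattern, such as an introductory sentence, are simply ignored.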

Use characters/personas for unique perspectives.

Assigning the model a role, such as “Respond as a medieval monk describing this process” or “Explain like a Gen Z influencer”, can yield more creative, specialized and contextual responses.
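With chat-style models, a persona is often placed in a separate instruction message ahead of the user’s question. The sketch below assumes a message list with "system" and "user" roles, a common convention; check the documentation of the model you use for the exact format it expects.

```python
from typing import Dict, List


def persona_messages(persona: str, question: str) -> List[Dict[str, str]]:
    """Put the persona in a system message and the question in a user message."""
    return [
        # The role names are an assumed convention, not tied to a specific API.
        {"role": "system", "content": f"You are {persona}. Stay in character."},
        {"role": "user", "content": question},
    ]


messages = persona_messages(
    "a medieval monk chronicling daily life",
    "Describe how bread is baked.",
)
for message in messages:
    print(message["role"] + ": " + message["content"])
```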

Specify desired format details.

Declaring constraints upfront, such as bullet points, a certain tone, a reading level, or a response length, helps produce more relevant and digestible outputs aligned with your needs.
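When the output feeds another program, a strict format such as JSON lets you check whether the model followed the instruction. This is a minimal sketch with illustrative helper names; the replies in the usage lines are written by hand.

```python
import json
from typing import List, Optional


def json_format_prompt(task: str, keys: List[str]) -> str:
    """Ask for a JSON object with a fixed set of keys."""
    key_list = ", ".join(f'"{key}"' for key in keys)
    return (
        f"{task}\n"
        f"Reply with a single JSON object containing exactly these keys: {key_list}. "
        "Do not add any other text."
    )


def parse_reply(reply: str, keys: List[str]) -> Optional[dict]:
    """Return the parsed object if it is valid JSON with the expected keys, else None."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return None
    if isinstance(data, dict) and set(data) == set(keys):
        return data
    return None


wanted = ["title", "summary"]
print(json_format_prompt("Summarize the article below.", wanted))
# Hypothetical replies, written by hand for this example.
print(parse_reply('{"title": "Calm", "summary": "Short."}', wanted))
print(parse_reply("Sure! Here is your summary.", wanted))
```

A `None` result is a signal to retry or to tighten the format instruction.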

Experiment and iterate.

There’s no one-size-fits-all for prompting – minor variations can significantly impact outputs. Treat prompts like hypotheses to test and tweak based on the results. Tenacious experimentation is key to finding optimal prompts.
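Treating prompts as hypotheses implies some way to compare them. The sketch below ranks prompt variants with a scoring function; `fake_generate` and `bullet_score` are toy stand-ins for a real model call and a real quality metric.

```python
from typing import Callable, List, Tuple


def rank_prompts(
    variants: List[str],
    generate: Callable[[str], str],
    score: Callable[[str], float],
) -> List[Tuple[float, str]]:
    """Run every variant once and return (score, prompt) pairs, best first."""
    scored = [(score(generate(prompt)), prompt) for prompt in variants]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


def fake_generate(prompt: str) -> str:
    """Stand-in model: replies with bullets only if the prompt asks for them."""
    return "- point one\n- point two" if "bullet" in prompt else "A single sentence."


def bullet_score(output: str) -> float:
    """Toy metric: count the lines that start with a bullet marker."""
    return float(sum(line.startswith("- ") for line in output.splitlines()))


variants = ["Summarize the report.", "Summarize the report as bullet points."]
for value, prompt in rank_prompts(variants, fake_generate, bullet_score):
    print(value, prompt)
```

Because real model outputs vary between runs, it is usually worth running each variant several times and comparing average scores rather than single results.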

By combining these prompting strategies, you can provide the guidance and context that maximizes the impressive capabilities of large language models for your specific needs. With practice, prompt engineering becomes an invaluable skill for rapidly extracting value from AI.

Advanced Prompting Techniques

While the best practices discussed in the previous section lay a solid foundation for effective prompting, there are several advanced techniques that can further enhance the quality and relevance of LLM-generated content. These techniques include few-shot learning, chain-of-thought prompting, and prompt engineering.

Few-shot Learning

Few-shot learning is a prompting technique that involves providing the LLM with a small number of examples of the desired output, in addition to the prompt itself. The examples live only in the prompt; the model’s weights are not updated.

- Providing examples of desired output: By showing the LLM a few examples of the type of content you want it to generate, you can help it better understand the task and the expected format, style, and tone of the output. For instance, if you want the LLM to write a product review, you could provide a couple of well-written product reviews as examples.

- Strengths and limitations: Few-shot learning can be particularly useful when the task or domain is specific or when the desired output requires a certain structure or style. However, it’s important to note that the quality of the examples provided can significantly impact the performance of the LLM. Additionally, this technique may not be as effective for more open-ended or creative tasks where a diverse range of outputs is desired.
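A few-shot prompt is typically laid out as a repeated pattern that ends where the model should continue. The sketch below uses an "Input:" / "Output:" layout, which is one common choice rather than a required format; the example descriptions are invented.

```python
from typing import List, Tuple


def few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    new_input: str,
) -> str:
    """Lay out worked examples in a fixed Input/Output pattern, then the new input."""
    blocks = [instruction]
    for example_input, example_output in examples:
        blocks.append(f"Input: {example_input}\nOutput: {example_output}")
    # End on an open "Output:" so the model continues the pattern.
    blocks.append(f"Input: {new_input}\nOutput:")
    return "\n\n".join(blocks)


examples = [
    ("wireless mouse", "A quiet, responsive mouse that frees your desk from cables."),
    ("steel water bottle", "Keeps drinks cold all day in a bottle built to last."),
]
prompt = few_shot_prompt("Write a one-sentence product description.", examples, "desk lamp")
print(prompt)
```

Since the model imitates the examples closely, their length, tone, and format should match what you want back.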

Chain-of-Thought Prompting

Chain-of-thought prompting is a technique that encourages the LLM to break down complex tasks or problems into a step-by-step reasoning process, making its thought process more transparent and easier to follow.

Encouraging step-by-step reasoning:

By prompting the LLM to “think aloud” and explain its reasoning step-by-step, you can gain insights into its decision-making process and better understand how it arrives at a particular solution or output. This can be particularly useful for tasks that involve problem-solving, decision-making, or logical reasoning.

Applications:

Chain-of-thought prompting has various applications, including:

- Problem-solving: This technique can be used to guide the LLM through complex problems, helping it break them down into smaller, more manageable steps and arrive at a solution in a logical and transparent manner.

- Decision-making: By encouraging the LLM to explicitly consider different options and weigh the pros and cons, chain-of-thought prompting can assist in making well-informed decisions.

- Explainable AI: By making the LLM’s reasoning process more transparent, this technique can contribute to the development of more explainable and trustworthy AI systems.
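In practice, a chain-of-thought prompt often asks for numbered steps followed by a clearly marked final answer, so the reasoning and the result can be separated. The sketch below shows one way to do that; the "Answer:" marker is an arbitrary choice and the sample reply is written by hand.

```python
from typing import Optional

ANSWER_PREFIX = "Answer:"


def chain_of_thought_prompt(question: str) -> str:
    """Ask for numbered reasoning steps and a clearly marked final line."""
    return (
        f"{question}\n"
        "Work through the problem step by step, numbering each step.\n"
        f"Finish with one line that starts with '{ANSWER_PREFIX}'."
    )


def extract_answer(reply: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if there is none."""
    for line in reversed(reply.splitlines()):
        stripped = line.strip()
        if stripped.startswith(ANSWER_PREFIX):
            return stripped[len(ANSWER_PREFIX):].strip()
    return None


print(chain_of_thought_prompt("A shop sells pens at 3 for $2. How much do 12 pens cost?"))

# Hypothetical reply, written by hand for this example.
sample_reply = "1. 12 pens is 4 groups of 3.\n2. Each group costs $2, so 4 x 2 = 8.\nAnswer: $8"
print(extract_answer(sample_reply))
```

Keeping the steps in the reply lets a reader check each one, while the marked line gives downstream code a single value to use.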

Prompt Engineering

Prompt engineering is the practice of optimizing prompts for specific tasks or domains, often by incorporating domain knowledge or external data.

- Optimizing prompts for specific tasks or domains: Different tasks or domains may require tailored prompting strategies to achieve the best results. Prompt engineering involves experimenting with different prompt structures, wording, and approaches to find the most effective way to elicit the desired output from the LLM for a given task or domain.

- Incorporating domain knowledge or external data: To help the LLM generate more accurate and relevant content, prompt engineers may incorporate domain-specific knowledge or external data into the prompts. This could involve providing the LLM with relevant facts, terminology, or background information specific to the domain. Fine-tuning the LLM on domain-specific data can also improve its performance, although that changes the model itself rather than the prompt.
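Incorporating external data can be as simple as selecting a few relevant facts and placing them in the prompt. The sketch below uses naive word overlap to pick facts, purely as a stand-in for a real search or retrieval step; the product facts are invented.

```python
import re
from typing import List


def words(text: str) -> set:
    """Lowercase the text and split it into alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_facts(question: str, facts: List[str], limit: int = 2) -> List[str]:
    """Keep the facts that share the most words with the question (naive matching)."""
    question_words = words(question)
    scored = [(len(question_words & words(fact)), fact) for fact in facts]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [fact for overlap, fact in scored[:limit] if overlap > 0]


def grounded_prompt(question: str, facts: List[str]) -> str:
    """Place the selected facts ahead of the question."""
    fact_lines = "\n".join(f"- {fact}" for fact in facts)
    return f"Use only the facts below to answer.\n\nFacts:\n{fact_lines}\n\nQuestion: {question}"


# Invented facts for illustration.
facts = [
    "The Model X kettle has a 1.7 litre capacity.",
    "The Model X kettle ships with a two year warranty.",
    "The Model Y toaster has four slots.",
]
question = "What warranty does the kettle have?"
selected = select_facts(question, facts)
print(grounded_prompt(question, selected))
```

Word overlap also counts common words such as "the", so a real system would need a better relevance measure; the point here is only the shape of the prompt.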

Prompt engineering is an evolving field that requires a deep understanding of LLMs, the specific task or domain, and the principles of effective prompting. By continuously experimenting and refining prompting strategies, researchers and practitioners can unlock new capabilities and push the boundaries of what LLMs can achieve.

While advanced prompting techniques like few-shot learning, chain-of-thought prompting, and prompt engineering can significantly enhance the quality and relevance of LLM-generated content, it’s important to note that they may require more effort and expertise to implement effectively. Additionally, these techniques should be used in conjunction with the best practices discussed earlier, such as providing context and ensuring clear and well-structured prompts.

Benefits of Mastering Prompt Engineering

While large language models are incredibly capable and powerful tools, their usefulness is inextricably linked to our ability to communicate with them effectively. Mastering the skills of prompt engineering – crafting well-structured prompts that provide clear context and instructions – is essential to unlocking the full potential of these AI systems. There are several key benefits to developing this expertise:

Maximize LLM Performance on Your Specific Tasks

At their core, LLMs are pattern matching machines, drawing upon the data they were trained on to generate relevant, human-like text. However, providing ambiguous or open-ended prompts leaves too much open to interpretation, resulting in outputs that miss the mark. With strategic prompt engineering, you can guide the model directly towards your desired outcome for any given task, significantly boosting its accuracy and performance. Clear prompts act like precision inputs into a sophisticated text generation engine.

Unlock Their Full Versatility as Productive AI Assistants

The most advanced LLMs are incredibly multi-talented, capable of everything from analysis and coding to ideation and creative writing with a simple tweak of the prompt. However, extracting maximum value requires mastering prompting techniques tailored to each potential use case. With the right framing and structuring, you can unlock the full versatility of these models to serve as powerful AI co-pilots and force multipliers across virtually any domain.

Facilitate More Natural and Contextual Interactions

Interacting with LLMs can often feel robotic and transactional, which is not surprising given that they are statistical text models rather than sentient beings. However, effective prompt engineering bridges this gap by providing relevant context, specifics and even persona framing that facilitates a more natural interactive experience. You can push the boundaries of human-like dialogue and problem-solving. Rather than a black box spitting out responses, LLMs become contextualized assistants tailored to your needs.

As LLMs continue to advance and proliferate across fields, prompt engineering skills will only become more vital and valuable. Those who invest time into mastering these strategies will gain a decisive edge in terms of maximizing an LLM’s performance, unlocking its full capabilities as an AI collaborator, and enabling more constructive human-machine exchanges.